Calling C functions, R v.s. Python

December 16, 2010

Take a look on the following simple C function:

double triplesum(double a, double b, double c) {

return a + b + c;

}

And we want to use it in Python:

>>> from ctypes import *

>>> lib1 = cdll.LoadLibrary('triplesum.so')

>>> lib1.triplesum.argtypes = [c_double, c_double, c_double]

>>> lib1.triplesum.restype = c_double

>>> lib1.triplesum(1.2, 3.4, 2.3)

6.8999999999999995

In R:

C code:

void triplesum(double* a, double* b, double* c, double* d) {

*d = *a + *b + *c;

}

Compile it by

$ R CMD SHLIB forR.c

R code:

> dyn.load("forR.so")

> a <- 1.2; b <- 4.3; c <- 2.3; d .C("triplesum", a, b, c, d)[[4]]

[1] 6.9

It’s hard to say which one is more convenient, but Python seems better because you don’t need to modify the code.

R‘s mechanism makes me feel like writing Fortran subroutine but in C syntax.

MathJax works!

August 8, 2010

Webkit Mathml: err… a long way to go.

November 13, 2009

The last WebKit truck have the code for MathML, er, primary support.

It seems that WebKit only speaks and recognizes at this moment.

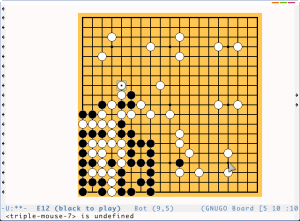

Fun with GnuGo in Emacs

November 7, 2009

Put gnugo.el and gnugo-xpms.el into your ~/.emacs.d/gnugo and put the following lines into your ~/.emacs

(add-to-list 'load-path (expand-file-name "~/.emacs.d/gnugo")) (add-to-list 'load-path (expand-file-name "~/.emacs.d/gnugo/mokuxpms")) (require 'gnugo-xpms) (require 'gnugo)

A test: Online equation esitor.

October 28, 2009

\[ \int_{-\infty}^\infty e^{-x^2}dx = \sqrt{\pi}\]

我這輩子看過最威的學術期刊文章標題

October 26, 2009

哥大經濟系 Sala-I-Martin 教授的大作1:

Xavier Sala-I-Martin, I just ran two million regressions , The American Economic Review, 1997, vol. 87, no.2, p.178-183.

R + OpenMP

October 21, 2009

The following patch is corresponding to ROMP‘s Google code web site file:

16c16

`type` function `name`(`arg.str`)

48c48

`type` function `name`(`arg.str`)

78c78

function `name`()

99c99

integer function ifelse(cond,x,y)

132c132

# system("gfortran -dynamiclib -O3 main.f90 -o main.so")

134c134

system("gfortran -fopenmp -lpthread -shared -O3 main.f90 -o main.so -fPIC")

157c157

NTHREADS=2

The result is amazing:

> source("/home/gongyi/Desktop/romp/Romp_01a.R")

[1] "times for pureR"

user system elapsed

3.584 0.268 3.905

[1] "times for Romp"

user system elapsed

0.060 0.004 0.051

Another OpenMP test: rand()

October 20, 2009

I use the following code (with slight modification from the last post):

#include <stdio.h>

#include <omp.h>

#include <stdlib.h>

int main(int argc, char **argv) {

const int N = 1000000;

int i = 0;

double a[N];

#pragma omp parallel for

for (i = 0; i < N; i++)

a[i] = ((double) rand())/((double) N);

#ifdef PRINTING

#pragma omp parallel for

for (i = 0; i < N; i++)

printf("%e\n", a[i]);

#endif

return 0;

}

The result is quite interesting:

gliao@mcstat5:~/Archives/Programming/C/Lab/OpenMP$ gcc -Wall testOpenMP.c -o testwoomp testOpenMP.c: In function ‘main’: testOpenMP.c:10: warning: ignoring #pragma omp parallel gliao@mcstat5:~/Archives/Programming/C/Lab/OpenMP$ gcc -Wall -fopenmp -DOMPE testOpenMP.c -o testwomp gliao@mcstat5:~/Archives/Programming/C/Lab/OpenMP$ time ./testwoomp real 0m0.037s user 0m0.036s sys 0m0.000s gliao@mcstat5:~/Archives/Programming/C/Lab/OpenMP$ time ./testwomp real 0m0.411s user 0m0.072s sys 0m0.408s

Note: I run the program on a Core 2 Quad machine.

How Nerd I Am?

October 19, 2009

如何用GotoBLAS2 取代Debian/Ubuntu內建的libblas.so.3gf

October 7, 2009

Debian/Ubuntu 內建(意即可以在不修改/etc/apt/sources.list的情況下, 透過apt-get install安裝的套件) 的 libblas3gf 及 liblapack3gf 都是NetLib所提供, 未經最佳化的reference implementation(參考實作), 其速度並不是很能讓人滿意。

GotoBLAS2

是由經歷略帶傳奇色彩的日藉工程師後藤和茂所開發的BLAS實作。 跟另一個著名的BLAS實作ATLAS相較之下, GotoBLAS2最大的特點就是包括了大量的組合語言碼用作最底層的最佳化 (ATLAS則是用完全不同的方式進行最佳化,請參考ATLAS的網頁), 在我的測試環境中,GotoBLAS永遠優於ATLAS.

後藤先生服務的美國Texas大學Austin分校的先進計算中心最近釋出了GotoBLAS2的最新版本, 版次為1.04 這個最新版本已經完全支援Lapack3.1.1。 意即可以以Gotoblas2作為基礎, 編譯出完整的BLAS+LAPACK的單一shared object(在UNIX上是*.so在Windows則是*.dll). 相較參考實作, GotoBLAS最明顯的優勢便是支援多執行緒(multithreading), 在今日多核CPU已成為主流的情形下, 仍然使用不支援多執行緒的BLAS實屬不智。

以下簡介如何從無到有編繹GotoBLAS2並取代內建的libblas3gf及liblapack3gf:

Step 1:

到UT Austion TACC下載GotoBLAS2( 需註冊)

Step 2:

安裝必要的編譯工具:

sudo apt-get install build-essential gfortran libgomp1

其中libgomp是GCC的OpenMP的支援實作。

Step 3:

修改Makefile.rule:

# # Beginning of user configuration # # This library's version VERSION = 1.04 # You can specify the target architecture, otherwise it's # automatically detected. # TARGET = NEHALEM # If you want to support multiple architecture in one binary # DYNAMIC_ARCH = 1 # C compiler including binary type(32bit / 64bit). Default is gcc. # Don't use Intel Compiler or PGI, it won't generate right codes as I expect. CC = gcc # Fortran compiler. Default is g77. FC = gfortran # Even you can specify cross compiler # CC = x86_64-pc-mingw32-gcc # FC = x86_64-pc-mingw32-gfortran # If you need 32bit binary, define BINARY=32, otherwise define BINARY=64 BINARY=64 # About threaded BLAS. It will be automatically detected if you don't # specify it. # For force setting for single threaded, specify USE_THREAD = 0 # For force setting for multi threaded, specify USE_THREAD = 1 USE_THREAD = 1 # You can define maximum number of threads. Basically it should be # less than actual number of cores. If you don't specify one, it's # automatically detected by the the script. # NUM_THREADS = 16 # If you're going to use this library with OpenMP, please comment it out. USE_OPENMP = 1 # If you don't need CBLAS interface, please comment it in. # NO_CBLAS = 1 # If you want to use legacy threaded Level 3 implementation. # USE_SIMPLE_THREADED_LEVEL3 = 1 # If you want to drive whole 64bit region by BLAS. Not all Fortran # compiler supports this. It's safe to keep comment it out if you # are not sure(equivalent to "-i8" option). # INTERFACE64 = 1 # Unfortunately most of kernel won't give us high quality buffer. # BLAS tries to find the best region before entering main function, # but it will consume time. If you don't like it, you can disable one. # NO_WARMUP = 1 # If you want to disable CPU/Memory affinity on Linux. # NO_AFFINITY = 1 # If you would like to know minute performance report of GotoBLAS. # FUNCTION_PROFILE = 1 # Support for IEEE quad precision(it's *real* REAL*16)( under testing) # QUAD_PRECISION = 1 # Theads are still working for a while after finishing BLAS operation # to reduce thread activate/deactivate overhead. You can determine # time out to improve performance. This number should be from 4 to 30 # which corresponds to (1 << n) cycles. For example, if you set to 26, # thread will be running for (1 << 26) cycles(about 25ms on 3.0GHz # system). Also you can control this mumber by GOTO_THREAD_TIMEOUT # CCOMMON_OPT += -DTHREAD_TIMEOUT=26 # Using special device driver for mapping physically contigous memory # to the user space. If bigphysarea is enabled, it will use it. # DEVICEDRIVER_ALLOCATION = 1 # Common Optimization Flag; -O2 is enough. COMMON_OPT += -O2 # Profiling flags # COMMON_PROF = -pg # # End of user configuration #

然後在同一目錄下執行make 如果你的主機CPU是多核心的, 例如說雙核心, 可以用 make -j2, 若是四核心, 可用make -j4.

Step 4:

編譯完成後,建立另一個目錄, 並將原GotoBLAS2目錄下的libgoto2_core2p-r1.04.a複製到你新建的目錄下,並在新目錄下執行:

$ ar x libgoto2_core2p-r1.04.a $ gfortran -lgomp -lpthread -shared -Wl,-soname,libblas.so.3gf -o libblas.so.3gf.0 *.o $ gfortran -lgomp -lpthread -shared -Wl,-soname,liblapack.so.3gf -o liblapack.so.3gf.0 *.o $ sudo apt-get install liblapack3gf $ sudo mv /usr/lib/libblas.so.3gf.0 /opt/libblas.so.3gf.0.orig $ sudo cp libblas.so.3gf.0 /usr/lib/. $ sudo mv /usr/lib/liblapack.so.3gf.0 /opt/liblapack.so.3gf.0.orig $ sudo cp liblapack.so.3gf.0 /usr/lib/. $ sudo ldconfig

大功告成!